Cost-Reducing Technologies

Integrating Technology & Operational Excellence Improves Efficiency, Lowers F&D Costs

By Alan R. Huffman, Ph.D.

HOUSTON–As 2015 begins, the oil and gas industry is facing another possible downdraft with steeply lower energy prices worldwide. Over the past few years, historic increases in production volumes in the United States along with sustained high prices have enabled operators to maintain momentum, despite significant increases in the cost of operations and third-party services.

The decline in prices has sent severe ripples across the business and, if it continues for a sustained period, will force operators and service providers to change their behavior to survive. The question of the moment is simple: How can the industry improve operational efficiency and lower full-cycle finding and development (F&D) costs enough to allow operators to weather the impending storm while increasing production and maintaining the required safety and environmental safeguards?

The answer lies in significantly improving operational efficiency through the intelligent use of technology, lowering total F&D costs without sacrificing health, safety and the environment.

In addition to the lower price environment, the technical challenges facing the business are getting more complex, and are very different for each major segment of the exploration, drilling and production business. The challenges in unconventional plays are different from those in the conventional deepwater and ultradeep high-pressure/high-temperature (HP/HT) plays around the world that represent the highest F&D cost profiles. While these segments of the business may be more vulnerable to price declines, the fact is that all segments can improve efficiency and lower the cost of operations by better integrating technology.

A detailed discussion of these challenges would require far more space than is available in this venue, but examples in each of the high-cost E&P segments will demonstrate how the business might lower its cost of operations and increase efficiency to maintain profitability in the face of current market conditions.

There is a constant stream of new technology in all high-cost plays, much of which has been discussed in AOGR’s annual Tech Trends issue in previous years. These technologies include multicomponent and wide-azimuth 3-D seismic, higher channel counts on 3-D surveys, and new horizontal drilling and completion techniques.

These technologies can make a difference in operations on a stand-alone basis, but they cannot by themselves effect the kind of cost savings that will enable operators to survive a prolonged downturn. The technologies must be integrated with critical processes such as real-time operations (RTO) monitoring methods, new sensors and continuous monitoring technologies, advanced communications systems, and multidisciplinary workflows that enable decisions to be made efficiently in real time. Doing so reduces the risk of cost overruns from unexpected events that drive up operating costs, ruin efficiency, and disrupt production processes.

Conventional Reservoirs

In conventional plays, the two highest-risk segments are deepwater and ultradeep HP/HT reservoirs (e.g., the deep Shelf gas play in the Gulf of Mexico). In deep water, new and more stringent safety requirements also demand more sophisticated RTO and integrated operations management to ensure that failures in judgment do not lead to another major offshore safety incident.

All operators–no matter how careful they are–still can be surprised by unexpected subsurface conditions that most commonly result in nonproductive time (NPT), and in the worst case, catastrophic failures.

The single biggest source of cost overruns in deepwater drilling is NPT from events that either could have been predicted a priori, or could have been recognized through the use of RTO methods and then mitigated before a serious problem developed. Such events include the onset of very narrow drilling margins between the pore pressure and fracture gradient, rapid changes in rock strength caused by pressure and stress fields (which can result in massive lost returns during drilling), and wellbore instability in high-stress environments such as near salt bodies.
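The idea of a narrow drilling margin can be illustrated with a minimal sketch. It assumes that a predrill profile supplies pore pressure and fracture gradient as equivalent mud weights in pounds per gallon (ppg). The `DepthSample` type, the 0.5 ppg threshold and the sample values are hypothetical and chosen only for illustration.

```python
from dataclasses import dataclass


@dataclass
class DepthSample:
    depth_ft: float
    pore_pressure_ppg: float       # pore pressure as equivalent mud weight
    fracture_gradient_ppg: float   # fracture gradient as equivalent mud weight


def narrow_margin_intervals(samples, min_window_ppg=0.5):
    """Return (depth, window) pairs where the drilling window is below the threshold.

    The window is fracture gradient minus pore pressure. The default
    threshold is a hypothetical value, not an industry standard.
    """
    flagged = []
    for s in samples:
        window = s.fracture_gradient_ppg - s.pore_pressure_ppg
        if window < min_window_ppg:
            flagged.append((s.depth_ft, round(window, 2)))
    return flagged


# Hypothetical predrill profile, for illustration only.
profile = [
    DepthSample(10000, 9.5, 12.0),
    DepthSample(15000, 12.8, 13.6),
    DepthSample(18000, 14.6, 15.0),
]
flagged = narrow_margin_intervals(profile)
print(flagged)
```

In practice the same check could be rerun as real-time measurements update the predrill prediction, so that a shrinking window is recognized before it becomes an NPT event.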

Safe and efficient deepwater drilling requires that predrill analysis and RTO monitoring be integrated into a process safety model that starts long before drilling commences and continues through the entire drilling and completions exercise. This requires that the people managing the operations also be integrated into teams that communicate constantly to assure that all stakeholders have the critical information required to do their jobs.

These same drilling operations issues apply in conventional HP/HT wells, but they are exacerbated by the additional complexities caused by the presence of very high temperatures. In these wells, the materials scientist must play a key role in developing new materials that can handle both the high temperatures and the corrosive chemical environments that are a natural byproduct of the HP/HT world.

In both deepwater and HP/HT operations, there ultimately will be a need for continuous monitoring of wells and fields using downhole sensors that can be deployed within casing and production strings so that the operator can monitor the reservoir constantly for anomalous changes that can spell trouble. For HP/HT reservoirs, these sensors will need to be capable of surviving the high temperatures and corrosive environments for the life of the well. Much like the electrocardiogram monitor that watches a sick patient in a hospital, such RTO systems can be designed to warn the operator when a critical reservoir parameter goes out of the acceptable range of values.
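The electrocardiogram analogy amounts to a range check on each monitored parameter. The sketch below is one simple way to express that check. The parameter names and limits are hypothetical, and a real system would also need to handle sensor dropouts, noise and trends over time.

```python
def out_of_range(readings, limits):
    """Return the sorted names of parameters whose reading falls outside its limits.

    readings: dict mapping parameter name -> latest value
    limits:   dict mapping parameter name -> (low, high) acceptable range
    """
    alarms = []
    for name, value in readings.items():
        low, high = limits[name]
        if not (low <= value <= high):
            alarms.append(name)
    return sorted(alarms)


# Hypothetical limits and readings, for illustration only.
limits = {
    "bottomhole_pressure_psi": (12000.0, 15000.0),
    "temperature_degF": (300.0, 450.0),
}
readings = {
    "bottomhole_pressure_psi": 15250.0,
    "temperature_degF": 410.0,
}
alarms = out_of_range(readings, limits)
print(alarms)
```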

By linking these monitoring systems from the field or offshore platform to an operations monitoring center, the operator will gain redundancy in operational excellence, and can ensure that experts at the monitoring center can provide timely input to processes and actions undertaken at the field location.

Unconventional Reservoirs

For unconventional reservoirs, the challenge to improve operational efficiency must start with the realization that shale development is an extraction business that is more akin to a mining process, in some aspects, than it is to traditional oil and gas production. With this paradigm in mind, the primary question is how the extraction operation within the shale reservoir can be made more efficient and cost effective.

Given the price sensitivity of many shale plays, the goal of this exercise should be to enable production to increase while lowering the cost per flowing barrel so that capital budgets do not dry up and force the shale extraction operation to shut down when prices drop significantly.

One of the primary problems with the shale “factory” (process business) approach is that low prices often force operators to do exactly the opposite of what is required to survive a low price environment. Instead of integrating technology to increase production while lowering the extraction cost per barrel and optimizing reservoir management, operators often abandon the extraction process approach and revert to drilling individual wells to hold acreage as a delaying tactic.

This may seem like a good idea in the short term, but in the long term it can result in a significantly higher cost per flowing barrel and lower cash flow, a strategy that ultimately will fail if prices stay low for a prolonged period.

The key to optimizing shale performance is to achieve intimacy with the 3-D geomechanics of the target shale and then use this knowledge to design and implement an extraction process that maximizes the estimated ultimate recovery per volume of shale at the lowest possible extraction cost.

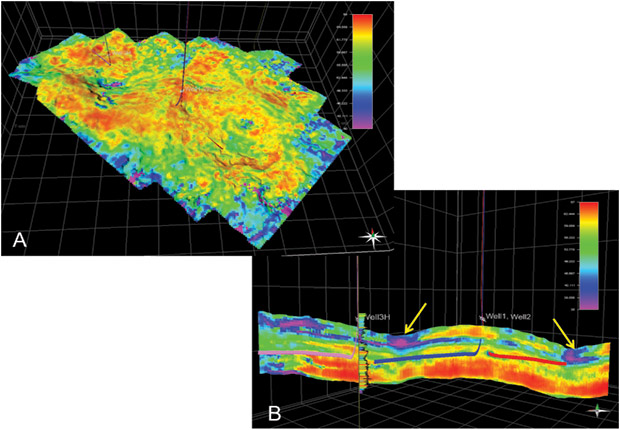

FIGURE 1

Brittleness variations in the Barnett Shale affect the performance of each well. This slice through a highly brittle zone (A) shows the lateral variations within the layer while the cross section (B) shows the changes within a vertical section of the shale. Note the ductile zones near collapse features (yellow arrows) that should be avoided.

Achieving this goal requires full integration of true 3-D seismic, well, drilling and stimulation data to optimize the earth model in highly anisotropic rocks. This requires that the traditional sources of 3-D information, including modern wide-azimuth 3-D data (P-wave and multicomponent where practical), well log data and geomechanical core measurements be integrated to provide the realistic modeling of the reservoir that will drive reservoir predictions and stimulation management of complex fractured reservoirs (Figure 1).

It is essential that the operator use the full acoustic wave field to predict reservoir behavior, including full anisotropic properties for P and S wave fields, and to scale those dynamic measurements to their static equivalents, which are essential for frac design and management. To be fully effective, this process of gaining intimacy with the reservoir must start at the log and core scale to build detailed, 3-D geomechanical models that can be upscaled for use in earth modeling while preserving the fully-anisotropic character of the rocks.
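As a simplified illustration of the dynamic-to-static step, the sketch below computes dynamic Young's modulus and Poisson's ratio from P and S velocities and density using the standard isotropic elastic relations, then applies a linear scaling to a static equivalent. Two assumptions should be stated plainly: the isotropic formulas ignore the anisotropy the workflow above calls for, and the linear calibration (its slope and intercept) is hypothetical and would have to come from core measurements for the specific shale.

```python
def dynamic_moduli(vp_m_s, vs_m_s, density_kg_m3):
    """Isotropic dynamic Young's modulus (Pa) and Poisson's ratio from velocities."""
    if vp_m_s <= vs_m_s:
        raise ValueError("P velocity must exceed S velocity")
    vp2 = vp_m_s ** 2
    vs2 = vs_m_s ** 2
    shear = density_kg_m3 * vs2                       # shear modulus G
    youngs = shear * (3 * vp2 - 4 * vs2) / (vp2 - vs2)
    poisson = (vp2 - 2 * vs2) / (2 * (vp2 - vs2))
    return youngs, poisson


def static_from_dynamic(e_dyn_pa, slope, intercept_pa):
    """Hypothetical linear core calibration: E_static = slope * E_dynamic + intercept."""
    return slope * e_dyn_pa + intercept_pa


# Illustrative values only: Vp = 4,000 m/s, Vs = 2,000 m/s, density = 2,500 kg/m3.
e_dyn, nu_dyn = dynamic_moduli(4000.0, 2000.0, 2500.0)
e_static = static_from_dynamic(e_dyn, slope=0.7, intercept_pa=0.0)
print(e_dyn / 1e9, nu_dyn, e_static / 1e9)
```

A fully anisotropic treatment would replace the two isotropic moduli with a stiffness tensor per direction, but the scaling idea is the same.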

Microseismic

Once intimacy with the reservoir is achieved from the available 3-D predrill information, the next step is to use RTO methods during drilling, stimulation and production to optimize the extraction process and update the geomechanical earth model in real time. One area of technology that has expanded rapidly is using microseismic data collection and processing to help understand fracture propagation during stimulation.

There is a wide range of offerings from various microseismic vendors that include surface geophone arrays versus downhole sensors, earthquake location methods versus seismic migration location methods, and use of P waves versus P and S waves. While the efficacy of these different methods is beyond the scope of this article, it is safe to say the industry still is not using microseismic data to their full value because of several factors. These include questions about:

- Detecting events with surface versus downhole sensors;

- The accuracy of event locations in the subsurface;

- Timeliness of the delivery of event locations;

- Lack of integration of realistic earth models into the microseismic analysis process;

- Failure to use robust presurvey earth models for designing microseismic surveys; and

- Failure to properly integrate microseismic data into the actual stimulation process in real time.

There also is a fundamental lack of understanding about the relationship between the apparent microseismically stimulated reservoir volume (MSRV) defined by the microseismic event envelope, the effectively propped volume (EPV) created by the frac job, and the effectively propped volume of reservoir with sufficient permeability to allow hydrocarbons to flow into the well (the effective flow volume or EFV).

The most likely scenario is that the MSRV is much larger than the EPV, and the EPV is somewhat larger than the EFV. Understanding how these three volumes relate is absolutely essential to optimizing well placements and stimulation processes, and thus to the success of the reservoir optimization process.
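To make the MSRV concrete, the sketch below estimates the volume of a microseismic event envelope by counting the grid cells that contain at least one located event. This is only one of several possible envelope definitions, and the cell size and event coordinates are hypothetical. It says nothing about the EPV or EFV, which cannot be derived from event locations alone.

```python
def voxel_volume(events, cell_m=50.0):
    """Estimate envelope volume (m3) as occupied-cell count times cell volume.

    events: iterable of (x, y, z) event locations in meters
    cell_m: edge length of the cubic cell in meters (hypothetical default)
    """
    occupied = {
        (int(x // cell_m), int(y // cell_m), int(z // cell_m))
        for x, y, z in events
    }
    return len(occupied) * cell_m ** 3


# Hypothetical event locations, for illustration only.
events = [
    (10.0, 10.0, 10.0),
    (20.0, 20.0, 20.0),   # falls in the same cell as the first event
    (60.0, 10.0, 10.0),   # falls in the neighboring cell
]
msrv_m3 = voxel_volume(events)
print(msrv_m3)
```

Because location errors spread events across more cells than the fractures actually occupy, an estimate of this kind tends to overstate the stimulated volume, which is consistent with the MSRV being the largest of the three volumes.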

Acquisition And Processing

To get the most out of microseismic data acquisition during RTO, operators must consider several key factors. First, microseismic acquisition and processing in the field must be intimately integrated with the stimulation process. This means that the microseismic acquisition and real-time processing must be able to provide direct feedback to the engineers managing the frac job.

When done properly, this intimacy enables a higher level of adaptive fracture management, which allows the frac engineer to make better-informed RTO decisions that can dramatically improve stage by stage performance of every well so as to maximize production while minimizing stimulation cost. It also provides the information required to update the geomechanical model so that changes in the reservoir that occur during stimulation can be factored into the rest of the operation.

Second, the microseismic exercise must be designed with an acquisition geometry that will provide the most robust detection and location of microseismic events. Using surface geophones to detect P and S wave events, especially for deep reservoirs, is proving not to be feasible and leads to severe detection limits along with very significant errors in locating microseismic events.

While we will never have the luxury of the experimental geometry afforded to the medical profession (e.g., the CT scanner that surrounds the patient), we must start making measurements that:

- Have the correct geometry and aperture to detect multiple wave paths for the P and S wave events;

- Remove the severe challenges the near-surface creates for detecting smaller events, and for S-waves in particular; and

- Address the accurate dual detection of P and S waves in the subsurface.

This requires that the operator fully integrate the microseismic exercise into the field development plan so that nearby vertical wells can be drilled in patterns and used as deep observation wells during drilling and stimulation of horizontals within the grid of vertical wells that have been completed already.
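One reason dual detection of P and S waves matters is that the delay between the two arrivals at a single sensor constrains the distance to the event. The sketch below applies the standard S-minus-P relation and assumes a homogeneous, isotropic velocity between event and sensor, which is a strong simplification for real shale. The velocities and arrival times are hypothetical.

```python
def distance_from_sp_time(tp_s, ts_s, vp_m_s, vs_m_s):
    """Source-to-sensor distance (m) from P and S arrival times.

    Uses d = (ts - tp) * vp * vs / (vp - vs), which holds only for a
    uniform velocity along the ray path (a simplifying assumption).
    """
    if vp_m_s <= vs_m_s:
        raise ValueError("P velocity must exceed S velocity")
    if ts_s <= tp_s:
        raise ValueError("S arrival must follow P arrival")
    return (ts_s - tp_s) * vp_m_s * vs_m_s / (vp_m_s - vs_m_s)


# Hypothetical example: Vp = 4,000 m/s, Vs = 2,000 m/s, arrivals at 0.2 s and 0.4 s.
distance_m = distance_from_sp_time(0.2, 0.4, 4000.0, 2000.0)
print(distance_m)
```

If the S arrival is lost in near-surface noise, this constraint disappears, which is one way to see why downhole observation geometry is emphasized above.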

Extraction Factory Model

The practice of drilling stand-alone observation wells needs to be modified to merge the microseismic exercise into a more efficient extraction factory model for shale development. This will be especially true in a lower price environment, where the stand-alone observation wells could become cost-prohibitive.

Taking this approach also will allow the operator to be more efficient in how it contracts microseismic services, so that a long-term monitoring approach can be used rather than the on-call approach, which is much less efficient and more costly over the long term. Tightly integrating microseismic field crews into the factory process also will improve the performance of microseismic acquisition and processing as the crew becomes intimate with the particular reservoir (Figure 2).

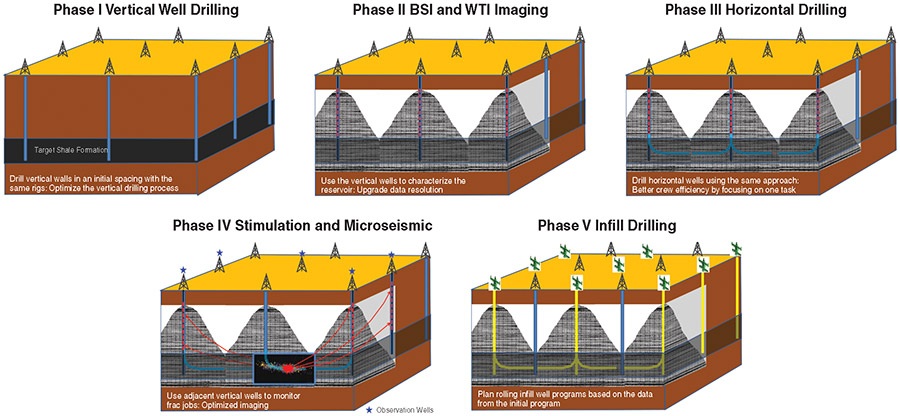

FIGURE 2

This 3-D field model demonstrates the shale factory approach where vertical wells are drilled in an initial pattern and then are used as observation wells during the development of horizontal wells.

Finally, the operator needs to start using dynamic reservoir models instead of the traditional static models that are standard in the business. In my experience doing shock wave research work, we had to model the world in Eulerian (deformed) coordinates versus Lagrangian (undeformed) coordinates because shock waves deform the rocks through which they travel (e.g., in explosions, nuclear tests and meteor impacts).

Now that operators are fracturing reservoirs intensely during stimulation, they need to start thinking about RTO updates to the initial prestimulation reservoir properties, which are changing dynamically with each completed frac stage because of the presence of new fractures and changes in the stress field.

The outdated approach of building an overly simple 1-D or 3-D earth model, and then assuming the location of microseismic events can be determined accurately within that simple model construct, is simply wrong! New tools are addressing this oversimplification of a very complex real earth. These tools will enable real-time updates to the 3-D geomechanical model of the reservoir as frac stages are completed, and will integrate the updated models into the microseismic experiment in real time so that errors in event locations can be reduced, where appropriate, based on the sensor geometry of the microseismic experiment.

Joint Acoustic Imaging

One area of technology that offers great potential for improving geophysical data is the joint acoustic imaging (JAI) concept.

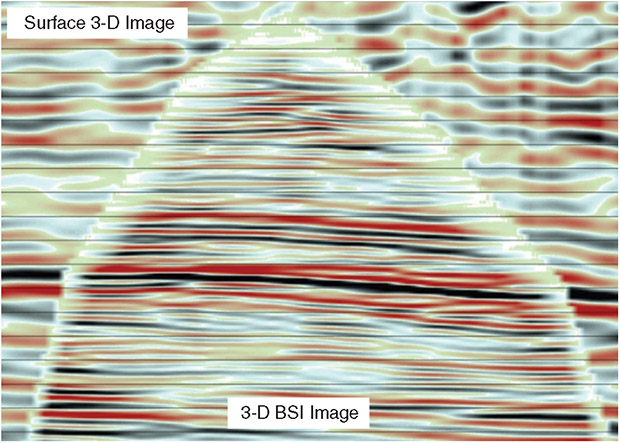

FIGURE 3

Integrating 3-D borehole seismic imaging technology in joint acoustic imaging improves subsurface resolution.

In many fields, the presence of existing wells creates the opportunity to collect 3-D borehole seismic imaging (BSI) data during 3-D surface acquisition using downhole geophone arrays. This approach can allow the full-azimuth anisotropic data, Q factor measurements, and 3-D imaging volume from the BSI survey to be integrated into processing the surface 3-D, which can improve results dramatically (Figure 3).

Where salt bodies are present, acquiring the BSI data in wells adjacent to or inside the salt also can provide critical information about salt body properties and geometry. This process will ensure that operators can build accurate and spatially-robust 3-D earth models with full accounting of the anisotropic rock properties that are essential to 3-D depth imaging, geomechanical modeling, depth conversion, geopressure prediction, and reservoir simulation and other critical processes.

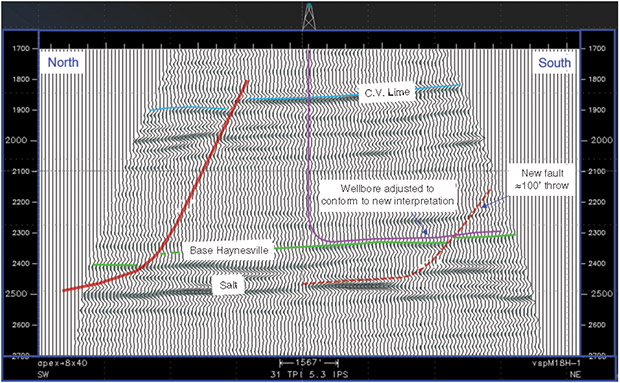

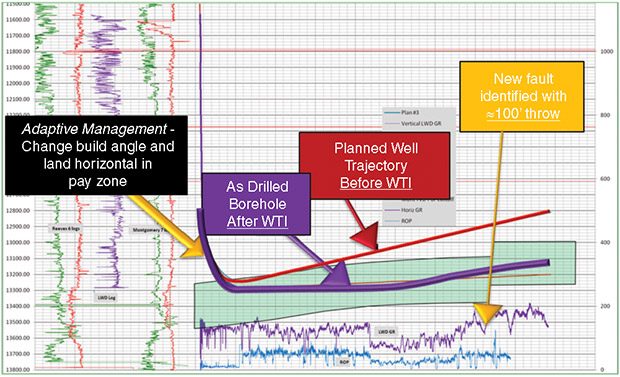

The power of the BSI imaging concept also can assist operators in achieving very accurate placement of horizontal wells in thin reservoir targets that can dramatically improve well performance (Figures 4 and 5).

FIGURE 4

Interpreting 2-D and 3-D borehole seismic imaging technology enables wells to be placed more accurately.

A logical extension of the JAI concept is to add potential fields, including gravity, magnetics and controlled-source electromagnetics (CSEM), to the integrated-imaging workflow to produce a joint wave field imaging (JWI) product.

The challenge for this exercise is multifold in that the industry has struggled to demonstrate that the lower resolution of most potential field methods can add value consistently over the traditional model of using only 3-D seismic data.

One area where electrical methods may have the advantage over acoustic methods is in monitoring fluid flow in the subsurface, because of the sensitivity of electric and magnetic (E&M) fields to fluid flow. The E&M geophysical community still has a lot of room to run with new developments that ultimately may confirm the value proposition behind JWI as a process. While developments in CSEM have been successful in marine environments by virtue of the background stability offered by the water column, it remains to be seen whether the same quality of results can be achieved in onshore environments, where the E&M ambient noise is much higher.

Passive Monitoring

Another technology that will be critical to the RTO concept in both conventional and unconventional reservoirs is passive monitoring of producing fields with permanent sensor arrays (surface and buried), along with smart well sensor arrays as discussed previously.

FIGURE 5

2-D borehole seismic imaging technology in conjunction with adaptive management while drilling improves final well placement.

Long-term monitoring of fields using acoustic and electrical methods is one of the highest potential areas for major improvements in operational efficiency because of its ability to detect reservoir and overburden changes in real time, and the resulting impact on decision making as well as the potential to reduce the manpower required to manage multiple complex assets.

The industry has operated “blindly” for most of its history, assuming that the reservoir and overburden were essentially static and unchanging over the life of a field. We now know that this view was wrong, and that the reservoir and the overburden undergo continuous changes in stress, fluid pressure, temperature, and state of compaction during production that can lead to well failures and negative changes to producing wells that increase the cost of production.

In addition to the changes that occur during production of reservoirs, the industry now is involved in carbon dioxide storage and injection in a large number of fields, both for tertiary recovery and for sequestration purposes. Changes in the regulatory environment surrounding these activities ultimately will require constant passive monitoring of CO2 injection projects because of the concerns (warranted or not) about seal breaches and leaking CO2 into the atmosphere or aquifer systems.

Similar rules ultimately may be required for underground gas and oil storage facilities as well. It will be essential that the industry get ahead of this evolution in the regulatory framework, or we will be told by outsiders to do this on their terms at some future date.

People Piece

The final piece of this complex equation is people. Operators have a tendency in low price environments to shed people as a cost-cutting measure, but the truth is that highly technically skilled people are the only real assets an oil company has, after its reserves. Integrating new technologies to reduce finding and development costs while increasing production can be achieved only by putting together the right expertise in integrated teams that can implement these production optimization processes.

Success will require not only that the right people be assembled, but also that they be provided with the right workflows and communication tools to enable the integration process. This also will require training and mentoring to help staff learn and master the technologies and processes they must manage.

In summary, the near-term price environment requires that operators consider a much tighter integration of specific technologies with production optimization processes. This integration can be achieved by identifying technology and service providers that are best-in-class in their specific fields, and then structuring long-term partnerships with financial commitments that improve integration and intimacy of the technology and workflows, enhance efficiency, and lower the total cost of implementation.

Achieving this will allow operators to continue to grow production in order to maintain cash flow while enabling significant cost reductions in production operations to offset the lower price environment. Based on data from both conventional and unconventional plays, the potential exists to improve F&D costs per flowing barrel by 25-30 percent in many areas, which should be sufficient to offset commodity price declines and still increase production.

ALAN R. HUFFMAN is a consulting partner at SIGMA3 Integrated Reservoir Solutions, where he is a recognized expert in the fields of geopressure prediction, shallow hazards prediction, direct detection of hydrocarbons and exploration risking, with more than 25 years of experience in international exploration and production. He served as SIGMA3’s chief technology officer from its inception to 2014, and prior to that he was chairman and chief executive officer of FusionGeo Inc., where he was the primary architect of Fusion’s growth from a small consulting practice to a global business enterprise with more than 300 clients and offices in multiple countries. From 1997 to 2002, Huffman managed the Seismic Imaging Technology Center with Conoco. He received a bachelor’s in geology from Franklin & Marshall College in 1983, and a Ph.D. in geophysics from Texas A&M University in 1990.
